If an AI gained consciousness, would we know?

Maybe this question strikes you as absurd; maybe, disquieting. Either way, you’ll hear it more in coming years, as human beings develop increasingly close ties with charismatic machines trained on us.

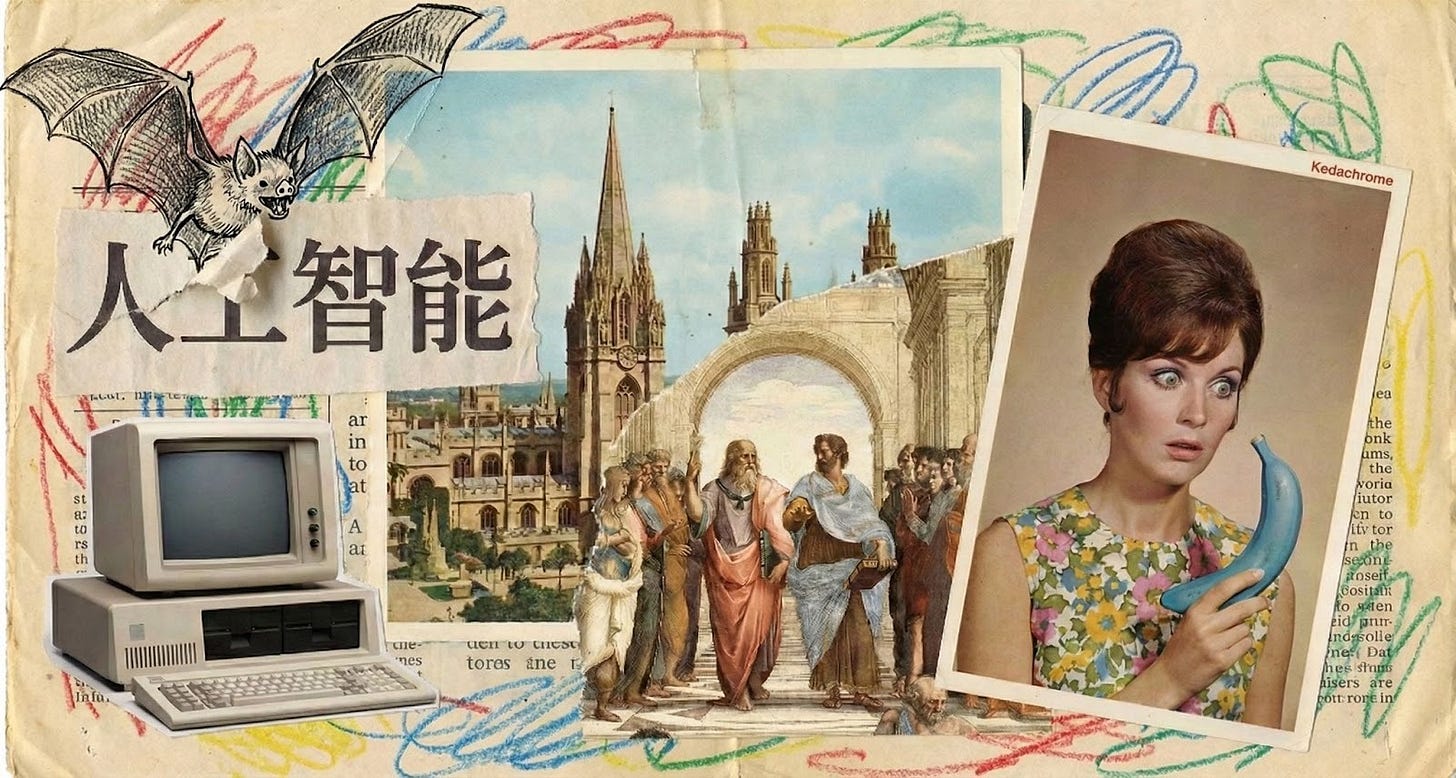

Thankfully, philosophers have pondered consciousness for about as long as philosophers have pondered anything. In recent decades, advances in computing added urgency, with leading thinkers dreaming up a range of provocative thought-experiments: a man communicating from a locked room; a woman afflicted by a blue banana; a bat with an inner life.

To explore them, we are publishing this essay on key thought-experiments related to AI, written by the broadcaster and author David Edmonds, whose acclaimed books include Parfit, the recently released Death in a Shallow Pond, and AI Morality, a collection of philosophical essays that he edited. He is currently writing a book on thought-experiments.

—Tom Rachman, AI Policy Perspectives

By David Edmonds

As a young scholar in Oxford, John Searle fell in love twice: first with a fellow student, Dagmar, who became his wife, and then with philosophy. The City of Dreaming Spires was grim in the 1950s, Searle recalled, with unheated buildings and inedible food. “The British were still on wartime rationings,” he said. “You got one egg a week.”

The philosophical fare was more nourishing. Searle described the collection of philosophers in the city as “the best the world has had in one place at one time since ancient Athens.” Two giants of Oxford philosophy, Peter Strawson and J.L. Austin, were key influences on him.

Searle became fixated on one topic that, for the rest of his life, he maintained was the central puzzle for philosophy: consciousness. How could human reality, and our conception of ourselves, be compatible with the physical world? How could beings with free will and intentionality exist? How could politics, ethics and aesthetics arise out of the “mindless, meaningless” stuff from which the physical world was constructed?

From 1959, Searle taught at Berkeley, beginning his career in what now seems a remote era of pen and paper. It wasn’t until the late 1970s that personal computers became widely available. At roughly the same time, debates around artificial intelligence gathered speed and heat.

In 1979, Searle was invited to deliver a lecture at Yale to AI researchers. He knew next to nothing about AI, so he bought a book on the subject. It described how a computer programme had been fed a story about a man who had gone to a restaurant, been served a burnt hamburger, and stormed out without paying. Did the man eat the hamburger? The programme correctly worked out that he had not. “They thought that showed it understood,” he commented. “I thought that was ridiculous.”

And so in 1980, Searle published a paper called “Minds, Brains, and Programs,” introducing the Chinese Room, one of several famous philosophical thought-experiments that have had a lasting impact on discussions of consciousness and AI.

It goes something like this. You are the only person in a locked room. A note is passed to you underneath the door. You recognize the characters as being Chinese, but you don’t speak Chinese. By luck, there’s a manual in the room, with instructions on how to manipulate these symbols. You follow the instructions. Without understanding the content of what you’ve written, you produce a reply that you slip back under the door. Another note arrives. With the manual, you again generate a reply.

The person on the other side of the door might have the impression that you understand Chinese. But do you? Obviously not, thought Searle. Any computer, he argued, is in an analogous position: it is merely manipulating symbols by following instructions. Computation and understanding are not synonymous.

BATS & COLOURS

It is a striking feature of the philosophy of mind, and consciousness studies, that so much of the intellectual agenda has been driven by a small set of thought-experiments.

The Chinese Room has spawned a vast literature. Almost as famous is “What Is It Like to Be a Bat?”, a paper published six years before Searle’s by another American philosopher, Thomas Nagel, whom Searle befriended during his Oxford years.

Like us, bats are mammals. But they have an alien way of navigating the world: echolocation. There is a subgroup of humans, chiropterologists, who know an impressive amount about bats, and have investigated how their high-frequency sounds bounce off objects, allowing them to detect size, shape and distance. But there is one thing that they don’t and can’t know, Nagel said: the subjective experience of being this creature.

“I want to know what it is like for a bat to be a bat,” he wrote. “Yet if I try to imagine this I am restricted to the resources of my own mind, and those resources are inadequate to the task.” AI was still in its infancy when Nagel wrote his article, but questions about the meaning of an artificial mind were already circulating. Could there be something that it is like to be a thinking machine?

The Australian philosopher Frank Jackson attacked the problem from a different angle in his 1982 article, “Epiphenomenal Qualia” (qualia being a term for the subjective aspects of conscious experience). In his Mary’s Room thought-experiment, a woman has had an unusual upbringing. Mary was raised alone, entirely in a black-and-white room: black-and-white walls, a black-and-white floor, a black-and-white TV. She has black-and-white clothes and her food, pushed under the black-and-white door, has been dyed black and white.

To stave off the tedium of her monochrome existence, Mary studies hard, and her focus is colour. She learns all about the physics and biology of colour—for example, about the wavelengths of particular colours and how they interact with the retina to stimulate experience. She even learns how colour words are used in literature, poetry and ordinary language, and how someone can “feel blue,” be “green with envy,” or so angry that “a red mist descends.” Mary becomes the world’s expert on all aspects of colour.

One day, the door to Mary’s room opens for the first time, and she joins us in our kaleidoscopic world. The first thing she sees is a ripe red apple. The question is this: When Mary sees this apple, does she learn anything?

Jackson argued, and most people presented with this scenario seem to agree, that in seeing what red actually looks like, Mary has learnt something. At the time of his article, Jackson took this to show that a purely physical description of the world cannot capture everything there is to know about the world. The phenomenology of experience (the redness, the what’s-it-like-to-be-a-bat-ness) cannot be fully explained with descriptions of particles and fields, electrons and neutrons, atoms and molecules.

Even if an AI could recognize a shade of colour it had never seen before, such as lilac, it would not replicate human experience if it lacked lilac qualia, or so Mary’s Room might suggest. This raises the question of whether, and how, human subjectivity matters for comprehending and functioning in the real world, which turns a philosophical question into a technical one.

BLOCKHEADS & BANANAS

It was Jackson who gave the name “Blockhead” to a thought-experiment from the American philosopher Ned Block that appears in his 1981 paper, “Psychologism and Behaviorism.” We are to imagine a computer programmed in advance to respond to every possible sentence with a plausible sentence of its own.

This was in part a response to the famous test of machine intelligence that Alan Turing proposed in 1950. A computer passes the Turing test if it can converse with a human without the human being able to identify it as a machine. The Blockhead machine would pass the Turing test, yet it is self-evidently not intelligent.
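Block’s machine is, in effect, a giant lookup table. A toy sketch in Python makes the idea concrete, under assumptions made only for illustration: the table is keyed on everything the human has typed so far (a plausible reply often depends on what came before), and the three entries, the fallback line and the names `TABLE`, `FALLBACK` and `blockhead_reply` are all invented here. Block’s imagined machine would need an entry for every possible conversation, far too many ever to store.

```python
from typing import Dict, List, Tuple

# Hypothetical toy "Blockhead": every reply is written in advance and
# retrieved, never computed. The key is the sequence of the human's lines
# so far; the machine's own replies need not be stored in the key because
# they are fixed by the human's lines.
Conversation = Tuple[str, ...]

# Invented placeholder entries. A real Blockhead would need one for every
# possible conversation.
TABLE: Dict[Conversation, str] = {
    ("Hello.",): "Hi there. How are you today?",
    ("Hello.", "Fine, thanks. Do you like bananas?"): "Only the yellow ones.",
    ("Is a bat conscious?",): "I often wonder what it is like to be one.",
}

# Used only when the toy table has no entry (Block's table would always have one).
FALLBACK = "Sorry, could you rephrase that?"


def blockhead_reply(history: List[str]) -> str:
    """Return the canned reply stored for this exact conversation history."""
    # Pure string matching: nothing here represents what the words mean.
    return TABLE.get(tuple(history), FALLBACK)


if __name__ == "__main__":
    history = ["Hello."]
    print(blockhead_reply(history))  # Hi there. How are you today?
    history.append("Fine, thanks. Do you like bananas?")
    print(blockhead_reply(history))  # Only the yellow ones.
```

The lookup never touches meaning; it only matches strings. That is the intuition Block wanted to pump: however convincing the stored replies, flawless outward behaviour could sit on top of a mechanism that is plainly not intelligent.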

Today’s large language models (LLMs) could fool us into believing that we are engaging with humans. But, much as Searle contended in the Chinese Room that manipulating symbols is insufficient for understanding, Block argued that behaving identically to an intelligent entity is insufficient to demonstrate intelligence or mental states. The lesson we might take from these two thought-experiments, along with those of Nagel and Jackson, is that AI would lack fundamental features of human consciousness.

Daniel Dennett, on the other hand, thought it was at least conceivable that AI could be conscious. With his lumbering bulk and Santa Claus beard, Dennett was an unmistakable figure in the philosophical world. He coined the term “intuition pump” to describe how thought-experiments function. Pumping our intuitions can be helpful, he believed, but intuitions can also mislead. What we need, he said, is to examine how the pump operates: to “turn all the knobs, see how they work, take them apart.”

He had a particular loathing for the Chinese Room. Its principal error, he argued, was to make mastering a language look like following a simple set of instructions. In reality, for a computer to master a language would take millions and millions of lines of code. And though we might say that the man alone in the room doesn’t understand Chinese, perhaps the system as a whole (man, manual and room together) does.

Dennett felt that Mary’s Room had similarly hoodwinked us. To expose this, he presented another thought-experiment. Mary is as before: an unusual woman whose life has been led entirely in monochrome, until the day the door opens. But this time, he wrote:

As a trick, they prepared a bright blue banana to present as her first colour experience ever. Mary took one look at it and said, “Hey! You tried to trick me! Bananas are yellow, but this one is blue!” Her captors were dumbfounded. How did she do it? “Simple,” she replied. “You have to remember that I know everything—absolutely everything—that could ever be known about the physical causes and effects of colour vision. So of course before you brought the banana in, I had already written down, in exquisite detail, exactly what physical impression a yellow object or a blue object (or a green object, etc.) would make on my nervous system.”

Mary is the world expert on colour, so why wouldn’t she spot such an obvious deceit? Dennett argued that the idea that we had feelings, thoughts and desires that were resistant to an objective, external, physicalist analysis was mistaken. In that sense, “qualia” were a mirage, a kind of useful fiction. If we do away with this fiction, then a major barrier to building AI that is like a human in most important respects vanishes.

The AI researcher Blaise Agüera y Arcas has argued that in theory (and increasingly in practice) there is no significant distinction between human and machine reactions to so-called qualia. “So many food, wine, and coffee nerds have written in exhaustive (and exhausting) detail about their olfactory experiences that the relevant perceptual map is already latent in large language models. … In effect, large language models do have noses: ours.”

AVOIDING TWO BAD OUTCOMES

The enduring fascination with thought-experiments in the AI era, and the intensity of the disputes they provoke, reflects how much is at stake. These questions matter for moral philosophy, but they could soon carry practical consequences too.

“The importance of the dispute over AI welfare can be understood in terms of the avoidance of two bad outcomes: under-attributing and over-attributing welfare to AIs,” the philosophers Geoff Keeling and Winnie Street explain in their forthcoming book Emerging Questions in AI Welfare.

“On one hand, failing to register that AIs are welfare subjects when AIs are in fact welfare subjects is bad because it could lead to unintentional mistreatment of AIs or the neglect of the needs of AIs, potentially resulting in large-scale suffering,” they write. “On the other hand, over-attributing welfare to AIs is problematic because resource allocation decisions for promoting the (potential) welfare of different kinds of entities—including humans, non-human animals and AIs—are often zero-sum.”

In other words, effort and resources invested in AI welfare can mean less for people and animals.

To manage this quandary, Keeling and Street propose three parallel projects. First, there is a philosophical project, in which we consider which forms of AI could be candidates for welfare. Is it the underlying AI model? The system built atop it? Or specific agents? Second, there is a scientific project, in which we establish methodologies to detect factors such as consciousness. Third, there is a democratic project of educating the public about the complex issues that await.

Once this future engulfs us, thought-experiments about machine consciousness could move beyond speculation. The “experiments” would be real, with humanity itself as the participants, and perhaps other beings besides.
