Today’s post comes from Harry Law, who writes about AI and society at Learning From Examples. It is inspired by the (almost daily) challenge of seeing a new survey about public attitudes to AI and trying to understand what the results mean. This blog is based on a more extensive report co-authored with GovAI.

In 1953, President Dwight Eisenhower gave a speech to remember. In front of the UN General Assembly, he proposed a new international body to regulate and promote the peaceful use of nuclear power. In what is now known as the Atoms for Peace address, Eisenhower attempted to balance fears of nuclear proliferation with hopes for the peaceful use of uranium in nuclear reactors:

‘The more important responsibility of this atomic energy agency would be to devise methods whereby this fissionable material would be allocated to serve the peaceful pursuits of mankind.’

Ike’s speech was about winning hearts and minds at home as much as securing America’s position abroad. The promise of limitless clean energy for the home, fantastic new technologies, and novel ways to travel represented the optimism of the nuclear age. The following year, Lewis Strauss, chairman of the US Atomic Energy Commission, famously said of the introduction of atomic energy: ‘It is not too much to expect that our children will enjoy in their homes electrical energy too cheap to meter’.

But it wasn’t to be. US and European opinion soured on nuclear technology after high-profile accidents at Chernobyl and Three Mile Island. While countries like France managed to keep their reactors online, states like the UK effectively outlawed the technology by making planning impossibly difficult and abiding by dodgy science about safety standards. No prizes for guessing which country has cheaper energy. Chernobyl likely resulted in thousands of deaths, but the data today tells us that nuclear power is among the safest of all energy sources. The primary result of the backlash was higher electricity prices and more CO2.

Whether or not a technology works is only half the battle. You also need the public to want it to work. Take genetically modified organisms. In 1978, Genentech successfully spliced a human gene into E. coli to synthesise insulin, igniting a wave of optimism among scientists. But almost a decade later, the public protested when bacteria modified to reduce the formation of ice became the first GMO to be released into US fields. Scientists spoke of new crops resistant to drought, floods and pests, but the public worried that companies were only interested in engineering herbicide-tolerant crops, so that they could sell more herbicide.

Concern about ‘Frankencrops’ took on a life of its own, blending ethical unease with fears of corporate control and ecological disruption. Particularly in Europe, but also beyond, this deep cultural mistrust led to activist groups, tougher regulation, and the rise of ‘natural’ alternatives. When added to the costs and complexity that genetic engineering experiments already faced, promising innovations were blocked, slowed or discarded. This had the unfortunate consequence of lowering the ceiling on the global agricultural supply.

There are tentative signs of a change in direction. In 2025, global nuclear power generation should inch towards a historic high, and the number of new reactors under construction is growing. GM crops are also ticking up, particularly in low- and middle-income countries. But governments are moving cautiously, as consultations make clear that a gap remains between scientific support and public caution. Consider the Philippines. In 2021, after a process spanning more than 20 years, the government approved the cultivation of golden rice, which is engineered to contain beta-carotene to help prevent blindness. In 2024, environmentalists convinced the courts to ban it.

For AI, the parallels are imperfect but useful.

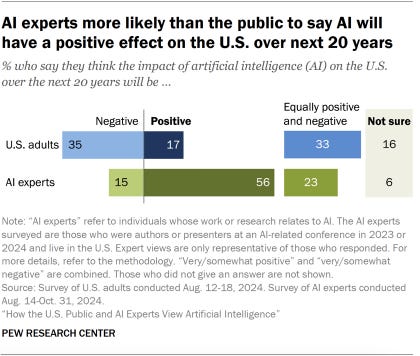

First, experts are generally more positive about AI than the general public. Like nuclear power, AI comes with safety risks that some fear may be catastrophic. Like GMOs, large corporations play a central role. As with GMOs, it’s also not hard to imagine members of the public viewing artificial intelligence as inherently ‘unnatural’ - a slippery term that people often use to oppose new technologies, and which captures a broad range of values, anxieties and beliefs.

Equally, AI is clearly different to nuclear power, GMOs, or other technologies that have struggled with public perceptions, like supersonic flights. Most obviously, millions of people knowingly use AI every day, to draft emails, help with homework, advance science, or even for companionship. What does that mean for the public’s attitudes to the technology? A new cottage industry has emerged to puzzle out this question. Each week a new survey is released. One says that people are deeply distrusting of AI while another shows their use of it increasing rapidly. Taken together, the results are hard to parse. Are people scared, hopeful, or both? Do they even understand what AI is? And what, crucially, are AI labs or policymakers supposed to do in response?

In the remainder of the post, I (a) introduce a mental model for how to think about public attitudes to AI; (b) make 15 claims I think are true about how people currently view the AI project; and (c) propose a new Global AI Attitudes Report.

Is all this necessary? In a strict sense, labs don’t need to understand public opinion to build and deploy advanced AI systems, while policymakers can craft rules without knowing precisely what the public thinks. But if the public’s concerns — about control, the environment, long-term risk — are dismissed as ignorance, then you can bet AI’s adoption will suffer. When people think that a technology offends their values, legitimacy collapses. It doesn’t matter how good your model is if no-one wants to use it. Nuclear power and GMOs both worked on paper, but were derailed when the social licence was revoked. There is no reason to think that AI is different.

Of course, there is no guarantee that what the public says they want is what is best for the technology. As with any kind of public engagement, there will always be a question about how much policymakers and AI labs can, or should, follow public attitudes (versus trying to shape them). But these questions are premised on being able to understand what the public actually thinks about AI in the first place.

What do I mean by public attitudes to AI?

Anybody hoping to measure, shape or respond to public attitudes to AI needs to consider (at least) five foundational questions.

1. Who are you interested in?: Public attitudes to AI diverge as soon as we break the public into groups, such as more savvy users of the technology versus those who know little about it. With GMOs, the attitudes of farmers and environmental groups have been central to their successes and failures. As a general-purpose technology, AI will have a much broader range of parties with distinct views, from teachers to musicians.

2. Where are they from?: Today, much of the global AI conversation is mediated by voices and polling data from English-language sources in the West. This creates a skewed picture of public attitudes to AI because the West is generally more negative than elsewhere.

3. What attitudes are you measuring?: ‘Public attitudes’ to AI is not a singular concept, but a multi-dimensional one. A cognitive dimension examines what people know and believe about AI and how it functions. An affective dimension captures their emotional responses to it, like fear, excitement, anxiety, hope, or trust. A behavioural dimension captures how people adopt, reject, or use AI systems in practice.

4. What is meant by ‘AI’?: Public attitudes to AI are never free-floating. People will respond differently if you ask about an abstracted version of the technology, specific AI applications, more advanced concepts like ‘AGI’, or the organisations involved. This is why attitudes can seem contradictory: someone might welcome AI in healthcare but reject it in policing, or trust a firm to develop a certain AI application, but not another.

5. What drivers are you interested in?: Many surveys capture less about AI and more about the underlying drivers that motivate people. Demographics, socioeconomic status, and an individual’s broader values and optimism influence not just how much they know about AI, but whether they view it as a threat, a tool, or a distant abstraction.

15 claims about public attitudes to AI

With colleagues, I reviewed ~100 surveys to try to better understand public attitudes to AI. Based on this data, I created 15 claims that I think are currently true with varying degrees of confidence. As I expand on below, surveys are an imperfect tool and my sample is limited to English-language sources. Attitudes are also fluid. So take these claims with a pinch of salt. But I think converting survey data to claims – using a good deal of intuition – is necessary to ensure that we are drawing insight from this data, to identify open questions worthy of further study, and to provide falsifiable yardsticks for future surveys to tackle.

Awareness and use: The general public’s awareness of AI grew slowly over the past decade but has increased rapidly since 2022, when people began knowingly, rather than passively, using AI in greater numbers. Within countries, awareness and use are highest among young, high-income, tech-savvy men, although the AI gender gap is narrowing. Globally, awareness and use of AI among Internet users are highest in certain lower/middle-income countries like Indonesia and India.

Sentiments: In the West, a majority of the public is more negative than positive about AI. Elsewhere, in countries like the UAE, Nigeria, and Japan, attitudes are more positive, for varied reasons. For example, Gillian Tett attributes Japan’s relative positivity about AI to its labour shortage and wariness around immigration, its history of more positive sci-fi (Astro Boy vs. 2001: A Space Odyssey), and its Shinto philosophy which avoids strict boundaries between animate and inanimate objects.

Knowledge: A majority of people are relatively confident in their knowledge about AI, but, when prompted, display clear knowledge gaps, including a lack of awareness of AI’s presence in everyday technologies. As people learn more about AI, they typically become more excited about it, overall, but also more worried about specific risks, like those connected to autonomous weapons or hiring.

Age: Younger people tend to be more accepting of AI, in both personal and professional contexts, from financial planning to relationships. They are also more likely to view AI favourably, compared to the human alternatives on offer. However, young people also worry more about certain risks from AI, such as its environmental impact, and are generally more invested in the need for AI regulation.

Income: Within countries, lower-income adults tend to be more anxious that AI will cost them their jobs or be used to monitor them. Higher-income adults are more likely to see AI as a beneficial productivity booster.

Political affiliation: Political affiliation is typically not a very reliable guide to overall attitudes to AI. In more polarised climates, like the US, those supporting the government tend to have more confidence in its ability to regulate AI, and vice versa.

AI applications vs ‘AI’ overall: People’s attitudes towards a representative basket of specific AI applications are more positive than their view of ‘AI’ in the abstract.

Most popular applications: People show greatest support for AI applications that could help achieve universally-popular outcomes (detect cancer); are personally important to them (improve the environment); or tackle a daily problem they are facing (e.g. find information, plan travel). People focus less on the suitability or additive value that AI, specifically, might bring to these applications.

Public vs. experts: While experts tend to be more optimistic about AI than the general public, much of the public is very supportive of certain AI applications that AI ethicists worry about, such as the use of AI to detect crime or in border control.

Most salient risks: People worry most about risks that are framed as a potential personal loss to them (e.g. job loss, data breaches) as well as those that inspire vivid, intense fear or revulsion (e.g. bioweapons, brain-rot, child abuse).

Unemployment: When asked to reflect on how AI may affect society, people frequently rate the loss of jobs as one of their top concerns. However, higher-income employees are less likely to view their own job as being at risk from AI.

Explainability: The public often does not expect or receive explanations in other consequential domains, such as from juries in court cases. By contrast, the public views the opacity of modern deep learning systems as a major risk, and many are willing to sacrifice some accuracy for more explainability. As people use AI systems more, and the models become increasingly reliable, these demands for explainability will likely soften.

Catastrophic risks: When prompted, people show high rates of concern about catastrophic risks from AI such as cyberattacks, bioweapons, and loss of control. However, the public worries less about these risks than other, non-AI, catastrophic risks, like nuclear war and climate change. In general, the public worries relatively little about catastrophic risks (AI-related or otherwise), compared to everyday issues like the cost of living and immigration.

Regulation: When asked, a majority of the public supports AI regulation. This support remains broadly consistent, across different forms, such as requiring safety evaluations or licensing. Most people do not trust AI companies to develop AI without some regulation and oversight.

International cooperation: The public generally supports more international AI governance, such as more international enforcement mechanisms or international treaties. To date, most of the public has generally not accepted the ‘arms race’ argument that Western countries should accelerate AI to outpace China.

Caveats, Caveats: The limits of survey data

The claims above are based on survey data. I think there is good evidence for them, but surveys also have clear limitations as a guide to public attitudes. Many of the challenges are long-standing and can be mitigated, in part, with the right methods and resources. But it is also ever harder to convince a representative set of people to take part in surveys, whether due to call or popup blockers, survey fatigue, or a declining sense of civic duty.

Noise: Public attitudes are noisy. This is most famously illustrated by the Lizardman’s Constant, a term for the fraction of people in any given poll who give unexpected or bizarre answers. For AI, this means we should always expect some proportion of survey data to be junk.

Question wording and order: Context effects mean that people can answer a question differently due to how the questions are constructed and sequenced. If you ask people a series of questions about risks from AI, followed by a question about whether they support ‘AI regulation’, respondents may respond more positively to the latter. The specific wording also matters. People view a ‘death tax’ differently to an ‘estate tax’ and may also respond differently when asked about AI ‘regulation’ vs ‘red tape’, ‘bureaucracy’, or ‘report writing’, even if the underlying idea is the same.

Oversimplification: Surveys are limited in how much detail they can provide or ask. This is a particular challenge for AI, a complex general-purpose technology. For example, the public is often asked whether they ‘trust’ AI, or who they trust to develop it. But trust is context-dependent. People may trust certain government bodies to oversee certain healthcare AI apps, but trust companies to develop the best language translation model. People’s trust may depend on how the underlying data is collected or stored. Without the right context, when a person says that they ‘trust’ an AI company or person, they are mostly saying that they know who that company or person is, like them, or both.

Social desirability: Social desirability bias represents the gap between what people genuinely think and what they report in surveys, due to societal norms and a desire for approval. Similarly, people often express an opinion in a poll to avoid saying ‘I don’t know’. This may explain why people generally overestimate their familiarity with AI or why they will happily share views on the merits of regulatory proposals that many AI policy professionals may struggle to fully understand.
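
To make the noise caveat above concrete, here is a minimal Python sketch of how an assumed junk-answer floor changes the reading of a small survey share. The 4% floor is an illustrative assumption (the figure usually quoted for the Lizardman’s Constant), not a number drawn from the surveys reviewed here; the function names and poll results are hypothetical, and the margin of error assumes a simple random sample.

```python
import math

NOISE_FLOOR = 0.04  # assumed share of junk answers; illustrative only


def margin_of_error(share: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion (simple random sample)."""
    return z * math.sqrt(share * (1 - share) / n)


def exceeds_noise_floor(share: float, n: int, floor: float = NOISE_FLOOR) -> bool:
    """True if the lower bound of the interval sits above the assumed noise floor."""
    return share - margin_of_error(share, n) > floor


# Hypothetical results from a poll of 1,000 respondents.
print(exceeds_noise_floor(0.05, 1000))  # a statement that 5% agree with
print(exceeds_noise_floor(0.30, 1000))  # a statement that 30% agree with
```

Under these assumptions, a 5% result cannot be told apart from noise, while a 30% result clearly can. The practical point is that headline findings built on very small shares deserve extra scepticism.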

What next? A Global AI Attitudes Report

To avoid the legitimacy collapse seen with nuclear power and GMOs, AI labs and policymakers need to better understand what the public thinks and feels. Surveys are not the only option. Focus groups, citizen juries, and vignette studies could pose richer scenarios, but they tend to trade breadth for depth, are harder to repeat, and come with their own biases. Another approach would be to focus less on what people say they think about AI and more on what their use of AI reveals about their attitudes. For example, practitioners can use privacy-preserving methods to analyse how people are querying LLMs or use experiments to understand the aspects of an AI tool that people most value (or are put off by).

All of these methods have their merits. But I think surveys should remain a cornerstone of the approach. They promise not just scale, but the chance to compare attitudes across topics, groups, and time. The current landscape of largely one-off surveys, infused by each sponsor’s own view and biases, and academic side-projects, is insufficient. What is required is a serious, longitudinal tracking effort: a living global survey of public attitudes to AI.

Some governments, like the UK, already conduct regular AI attitudes surveys, as do firms like KPMG. A next step could see interested parties from governments, AI labs, industry bodies, and survey experts create a shared map of how attitudes evolve across time, cultures, and domains. Such a programme, which we might call the Global AI Attitudes Report, could repeatedly ask the same people (or highly comparable cohorts) the same core questions. This would help to separate short-term fluctuations — like a spike in concern after a viral deepfake — from more durable shifts in public values. The model here might be something akin to the British Social Attitudes Survey or the World Values Survey. But to be useful for AI, this programme would need to move faster and look wider (though we know this is possible given the success of the International AI Safety Report).
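
As a rough illustration of separating short-term fluctuations from durable shifts, the Python sketch below compares each survey wave with the median of its immediate neighbours. A one-off spike stands out from its neighbours, while a sustained rise moves the local median with it. The data, the 5-point threshold, and the function names are all hypothetical; a real tracking programme would need a more careful statistical model.

```python
from statistics import median


def local_median(values, i, half_width=1):
    """Median of the waves within `half_width` of wave i (clipped at the ends)."""
    lo = max(0, i - half_width)
    hi = min(len(values), i + half_width + 1)
    return median(values[lo:hi])


def flag_spikes(values, threshold=0.05, half_width=1):
    """Indices of waves that differ from their local median by more than `threshold`."""
    return [
        i for i, v in enumerate(values)
        if abs(v - local_median(values, i, half_width)) > threshold
    ]


# Hypothetical share of respondents "very concerned" about AI across nine waves.
waves = [0.40, 0.41, 0.40, 0.55, 0.42, 0.41, 0.48, 0.49, 0.50]
print(flag_spikes(waves))
```

With this made-up series, only the fourth wave (index 3, the 0.55 reading) is flagged as a spike. The later, sustained rise to around 0.49 is not flagged, which is the kind of durable shift a longitudinal survey is meant to surface.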

We don’t currently know what questions we should be asking about AI in five years’ time, or even two years’ time. That means the Report should have scope to add new questions, for example based on trends in how people and enterprises are using AI, and predicted capability shifts. The primary goal should be to deliver new data and revised claims, but the project could also provide standardised survey resources for other developers to draw on.

Key components of the new Global AI Attitudes Report could include:

A standardised question bank: The Global AI Attitudes Report should build on initial efforts to create a shared set of vetted, carefully sequenced questions, and provide guidance for how to structure questionnaires.

AI archetypes: Rather than grouping respondents by age, gender, and income, the Report could build and test richer archetypes of people with common attitudes to AI.

Trade-offs: Because attitudes toward ‘AI’ in the abstract reveal little, the Global AI Attitudes Report could probe views on concrete applications, from AI tutors in schools to AI diagnosticians in hospitals, and weave in characteristics and design choices that force the public to take a stance on the inevitable trade-offs that AI poses. Similarly, rather than asking the public if they support AI regulation, in general, the Report could ask trade-off questions like: “Would you support holding AI companies legally liable for chatbots’ financial and medical advice, if it meant they had to restrict free public access to these queries, to limit liability risks?”

Openness and global representation: The Global AI Attitudes Report should serve as a shared public good, with the full results made public and the methods transparent. To be credible, the effort must include representative samples from across the world to identify both universal values and points of cultural divergence.

AI-powered methods: It would be a missed opportunity not to use AI to help measure attitudes to AI. Academics are already using AI to help test survey questions and interpolate missing data. From Camden to Kentucky, experiments are underway to use AI to inform new kinds of public deliberation. The Report could provide a testing ground for evaluating the usefulness of AI itself to such endeavours.

With thanks to Noemi Dreksler, Chloe Ahn, Daniel S. Schiff, Kaylyn Jackson Schiff, Zachary Peskowitz, Conor Griffin, Julian Jacobs, Steph Parrott, Haydn Belfield, and Tom Rachman.

We're building a version of this over at Collective Intelligence Project; would love to chat with you about this!

Popular culture—and I am clubbing religion under the same roof—also plays such an important role when it comes to technology. I grew up in a Christian-majority state with Christian education; I can vividly recollect that in 6th grade, a teacher of mine was talking about GMOs and plastic surgery: she told us mankind was playing with creation of God. I thought about it for days. Mind you, this was a period in that school when we were asked to refrain from playing games like DOTA or listening to bands like KISS - your usual mark of the beast panic era (in retrospect, this was probably because kids were joining secessionist groups or getting into hard drugs). Then there is also this passive reinforcement: you watch an American TV show where the protagonist is travelling to Europe, and the first comment usually is: "Tomatoes don't taste like anything back in America," it is implicit that this is because of GMO produce.

As an adult, I have a higher scientific tolerance and temperament; my attitude towards GMOs is the same as GLP-1 drugs: if it's going to help mankind, if it's scientifically safe, and will do maximum good, why not use it? I live in a region where people lose their livelihood every year due to floods - providing them a dignified living should be the goal. But I am also living in a region where food safety standards and drug regulations are not stringent. Anybody could package anything as organic and sell it in the farmers' market on Sundays, while presenting it as a small business. All this to say, when rich continents like Europe outright ban something like GMOs, it tends to have a cascading effect. When I am buying something in India, I look up if it is US FDA approved or matches European standards, and when I see such negative perception toward GMOs, despite my scientific tolerance, my gut says otherwise.

Side note: 2001: A Space Odyssey is such a beautiful movie :)