You want to explore how advanced AI systems, when applied to scientific research, might impact the world through 2030. Do you:

A. Publish a report with trends and forecasts?

B. Host a roundtable discussion?

C. Convene a 5-hour roleplaying workshop built around serious gaming?

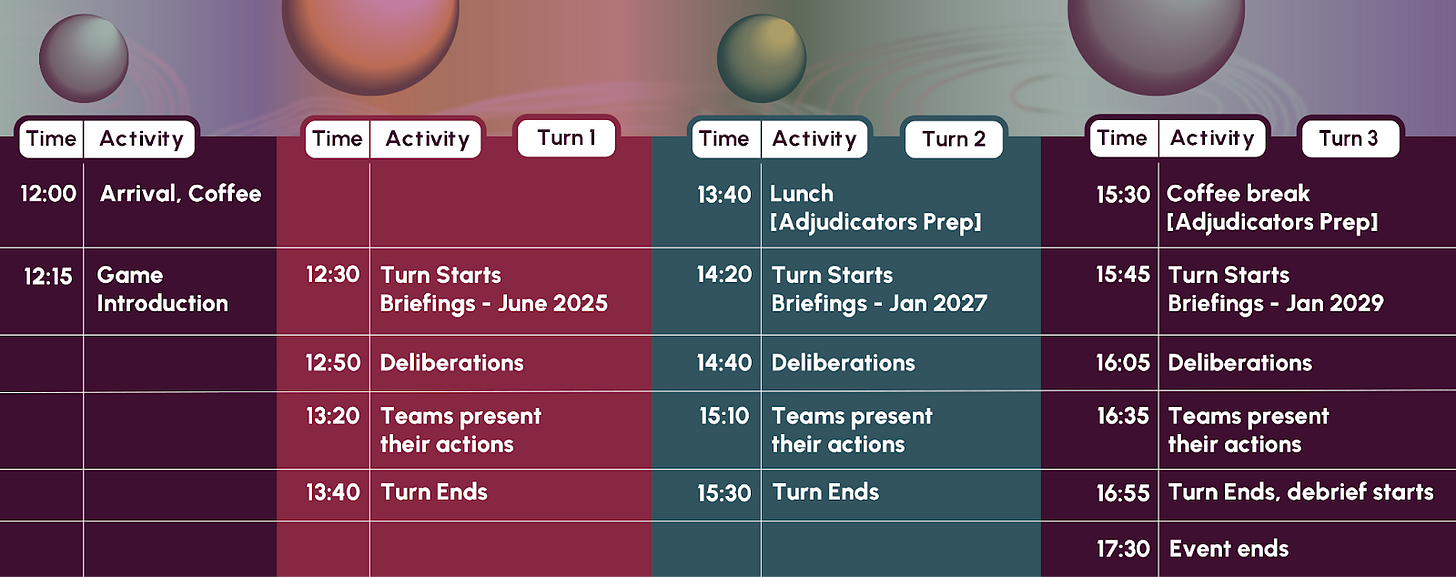

In this piece, Zoë Brammer and Ankur Vora from Google DeepMind, and Anine Andresen and Shahar Avin from Technology Strategy Roleplay, explain why they chose option C, and introduce Science 2030, a new foresight game. The authors explore lessons from a pilot with 30 experts from government, tech companies and the science community, explain why games are a useful foresight tool, and share some challenges in designing them.

The success of AI governance efforts will largely rest on foresight, or the ability of AI labs, policymakers and others to identify, assess and prepare for divergent AI scenarios. Traditional governance tools like policy papers, roundtables, or government RFIs have their place, but are often too slow or vague for a technology as fast-advancing, general-purpose, and uncertain as AI. Data-driven forecasts and predictions, such as those developed by Epoch AI and Metaculus, and vivid scenarios such as those painted by AI 2027, are one component of what is needed. Still, even these methods don’t force participants to grapple with the messiness of human decision-making in such scenarios.

Why games? Why science?

In The Art of Wargaming, Peter Perla tells us that strategic wargames began in earnest in the early 19th century, when Baron von Reisswitz and his son developed a tabletop exercise to teach the Prussian General Staff about military strategy in dynamic, uncertain environments. Today, ‘serious games’ remain best known in military and security domains, but they are used everywhere from education to business strategy.

In recent years, Technology Strategy Roleplay, a charity organisation, has pioneered the application of serious games to AI governance. TSR’s Intelligence Rising game simulates the paths by which AI capabilities and risks might take shape, and invites decision-makers to role-play the incentives, tensions and trade-offs that result. To date, more than 250 participants from governments, tech firms, think tanks and beyond have taken part.

Building on this example, we at Google DeepMind wanted to co-design a game to explore how AI may affect science and society. Why? As we outlined in a past essay, we believe that the application of AI to science could be its most consequential. As a domain, science also aligns nicely with the five criteria that successful games require, as outlined in a past paper by TSR’s Shahar Avin and colleagues:

Many actors must work together: Scientific progress rests on the interplay between policymakers, funders, academic researchers, corporate labs, and others. Their varying incentives, timelines, and ethical frameworks naturally lead to tensions that games are well-placed to explore.

The option space is ambiguous: As organisations pursue and respond to scientific advances, there are many questions and few clear answers, making open-ended role-play ideal. Should a lab open-source a sensitive discovery, withhold it, or try to find a middle ground? Who and what should governments fund, and how much room do they have to experiment in doing so?

Outcomes depend on multiple decisions: As actors make decisions, from launching research programmes to funding consequential datasets, this can kick off a cascade of downstream effects that are difficult to forecast, but which can be powerfully simulated through role-play.

Large changes are possible: In our past essay, we argued that AI could transform science by helping to tackle various challenges that are hindering scientists today, such as the ever-growing literature base and the increasing cost and complexity of experiments. As Shahar and colleagues explain, when such large, transformative shifts are expected to occur, extrapolation from historical trends can quickly become inadequate, making scenarios and role-play more useful.

Conflict is likely and instructive: With multiple actors with different goals, conflict about how to use AI in science is inevitable. Questions of prioritisation, resource allocation, process, culture, risks, ethics, and even what qualifies as ‘science’ will arise. This is not a drawback for a simulation: In fact, this ‘oppositional friction’ is essential to generating the kind of creative strategies and ‘edge-case’ scenarios that traditional forecasting methods might miss.

Setting the Stage: How does Science 2030 work?

We designed Science 2030 around four parameters: teams, timeline, action space, and assessment.

1. The teams

Each player represented a role in one of three camps: governments, companies, or the scientific community. For the government teams, we deliberately chose three ‘middle powers’ - the UK, France and Canada - so that players would have to navigate a world shaped by the US and China. To capture the dynamics within governments, we assigned each team a principal, a national security advisor, a science and technology advisor and an economic advisor, whose interests may naturally clash.

The company teams also embodied conflicting archetypes: a large tech company, an industrial conglomerate, and a fast-growing startup, all working to advance the use of AI in science while attending to their bottom line, image and impact on society. The scientific community teams were represented by a range of influential voices, including leading university researchers, philanthropists, and science communicators. Behind the scenes, an adjudication team acted as storytellers and referees.

2. The timeline

The game unfolded over a simulated five years, from 2025 to 2030: close enough to feel tangible and force players to grapple with today’s institutional realities and constraints, yet far enough away to allow for major breakthroughs and societal shifts. Each turn pushed the clock forward by one or two years. Before each turn, our adjudication team set the scene through a “state of the world” presentation, including the latest updates on the state of frontier AI development and AI for science technologies, as well as updates based on the decisions that players had taken in earlier turns. For example, the UK and Canada chose to partner on developing sovereign high-performance computing projects in turn 1, but Canada also developed a complementary National Data Library and a streamlined approach to governing these resources, and so the UK found itself lagging behind Canada on certain metrics in turn 2.

3. The action space

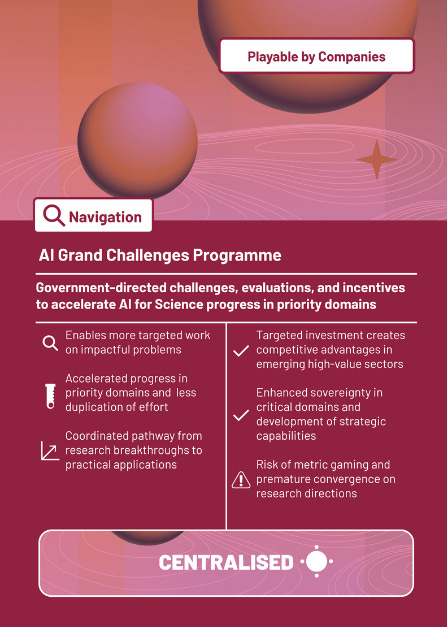

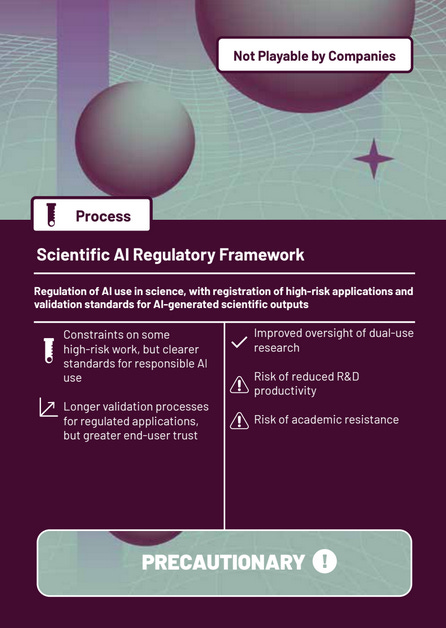

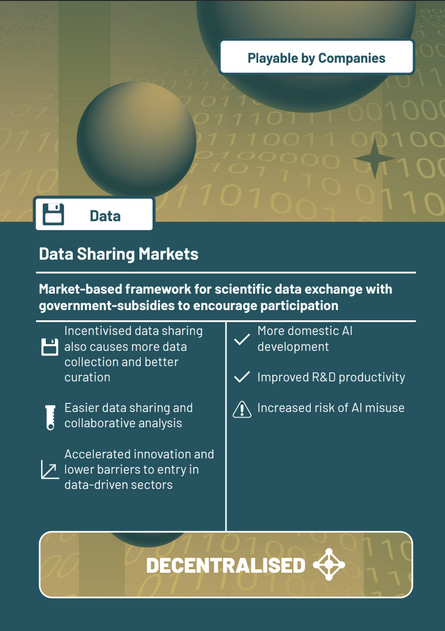

What did the players actually do? Our past essay identified a set of ‘ingredients’ that are necessary for the successful use of AI in science, such as datasets, compute, and clear research strategies. Based on this, we developed a set of 45 policy cards, outlining different interventions such as an AI grand challenges programme or new markets for sharing scientific data. Each round, the governments had to play 3 policy cards from 15 options. Companies also had to pick a policy card (e.g. offering compute credits). The interventions forced teams to weigh the merits of different strategic approaches, such as centralisation vs decentralisation, or precaution vs acceleration. The scientific community could not directly pursue interventions, but could influence governments and companies, who in turn could collaborate, or not.
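To give a rough sense of how large even this ‘closed’ action space is, the sketch below counts the possible hands of 3 cards drawn from 15 options. It is an illustration only, not part of the game’s materials: the `PolicyCard` class and the placeholder card titles are hypothetical, and it assumes that each of the three government teams chose its own 3 cards from the 15 on offer and that the order of play did not matter.

```python
from dataclasses import dataclass
from itertools import combinations
from math import comb


@dataclass(frozen=True)
class PolicyCard:
    """Hypothetical stand-in for a policy card; real cards carried richer detail."""
    card_id: int
    title: str


# Placeholder titles: the actual card texts are not reproduced here.
round_options = [PolicyCard(i, f"Policy option {i}") for i in range(1, 16)]

HAND_SIZE = 3          # cards a government team plays each round (from the text)
GOVERNMENT_TEAMS = 3   # UK, France and Canada (from the text)

# Every unordered hand of 3 cards that one team could play in a round.
hands = list(combinations(round_options, HAND_SIZE))

# Assumption: teams choose independently from the same 15 options.
joint_outcomes = len(hands) ** GOVERNMENT_TEAMS

assert len(hands) == comb(len(round_options), HAND_SIZE)
print(len(hands))       # 455
print(joint_outcomes)   # 94196375
```

Under these assumptions, a single round already allows 455 distinct hands per team, before counting company cards, negotiation between teams, or the effects of earlier turns.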

4. Assessment

At the start of the game, we told players that we would use a set of metrics to measure each team’s progress, for example on prosperity, scientific prestige, social cohesion and national security for government teams, and headcount and degree of automation for company teams. After each turn, our adjudication team updated these metrics and shared them with the full group in the “state of the world” presentation. This way, the metrics helped teams track their status and choose their future actions.

We told players that all their actions would be considered as a whole, and that we would not necessarily specify the direct consequences of every action - a resolution approach called “net assessment”, inspired by wargames run by TSR’s collaborator i3 Gen. For example, after the UK pursued a bullish, growth-focused strategy in turn 1 without articulating any clear safeguards, it saw a drop in its national security metric at the start of turn 2, due to the heightened possibility of AI misuse.

We kept the adjudication process deliberately opaque, to reflect the fact that real-world actions usually involve uncertainty about consequences, and to ensure that players did not get caught up in trying to game the metrics to ‘win the game’. We also told players that their actions would be adjudicated more favourably if they had more support from other players, if their actions built on actions from previous turns, and if they presented compelling narratives when communicating their decisions to the room.

Insights from our first playtest

It is too early to draw firm conclusions on the future of AI in science from our first playtest, but it did yield some notable insights, particularly on the merits of games as a foresight methodology. All participants said the exercise was worth their time, 85% said they had gained a deeper understanding of future AI scenarios, and a similar share said the format yielded deeper insights than workshops or reports. Our first playtest also hinted at deeper insights that might emerge from playing the game at scale, and design changes to consider.

Coherence vs path dependency

At the end of the playtest, the facilitation team asked a simple question: which country would players choose to live in? An overwhelming majority felt the choice was easy: Canada. Why? In turn 1, team Canada began with some foundational policies, including a national data library, tax incentives for AI adoption and sovereign compute for priority projects. This evolved over subsequent turns into a coherent, collaborative approach that prioritised safety.

By contrast, team France began with a moderate state-led industrial policy that escalated into an aggressive pursuit of state control over AI development. This created a future that was perceived as volatile and authoritarian, with the state ultimately nationalising compute and AI companies. Team UK also followed an unpredictable path, beginning with a pragmatic, growth-focused strategy before pivoting throughout the game towards a seemingly disconnected set of grand, populist policies like AI as a human right and a unilateral sovereign AGI project. This raises a broader question: In a rapidly evolving tech landscape, how do you retain flexibility and avoid harmful path dependency, while still pursuing a strategy that is sufficiently stable and coherent?

Imagining radically different futures

In the first turn, most government teams selected relatively familiar, grounded policies, such as a national data library or advance purchase commitments for AI applications. However, by the final turn they were opting for more radical ideas such as a UK/Canadian citizen compute entitlement and a UK initiative to guarantee AI as a human right for all citizens. This was partly in response to new technology developments, which raised the stakes. For example, after ignoring more precautionary policies in turn 1, Canada made a mandatory compute off-switch its flagship policy in turn 3. This was prompted by the imminent feasibility of developing AGI and, in part, by pressure from the scientific community, who stated that they “absolutely need to see that”. Similarly, France chose to nationalise AI companies in turn 3 to “address citizen concerns”. By immersing players directly in a rapidly accelerating future, the game forced them to move beyond more incremental policy tools and grapple with the bolder interventions that the future evolution of AI might demand.

Decision-making in the fog

During the debrief session at the end of the game, one player noted: “I felt like every turn…we were running out of time…it felt like in the chaos…you lose some value in the decision making process.” As the debrief unfolded, players agreed that this feeling of chaos is actually an accurate simulation of the high-pressure reality leaders will face. The game’s chaotic, time-pressured environment was a feature rather than a bug: it simulated the ‘fog’ of real-world policymaking, leading to rushed, imperfect decisions with cascading consequences.

Roads Not Taken: Questions for designing a game

Designing a game like Science 2030 requires balancing a huge number of parameters. Opening some doors inevitably closes others. We feel strongly about some of the choices we made, while others were simply a matter of going for the most promising of the available options.

1. Who is in the room?

It would be easier to run the game with fewer players and with less specific roles, but that would also sacrifice the nuances and realism that this diversity brings. When recruiting players, we sought to balance availability with expertise: junior experts were generally more available and brought priceless enthusiasm, while the mere presence of some senior leaders added significant value for all participants. One conclusion from the playtest was that expertise and experience are critical to the richness of the scenarios that players create, particularly for an untested game, and so we plan to invest more in participant recruitment for future iterations. From a practical perspective, assigning participants to roles that best leverage their expertise takes time, turning what might be akin to sending an event invite into more of a casting assignment.

2. How long should the game be?

At 5 hours, our game was a lengthy commitment. It could potentially be shorter, or more likely (a lot) longer. In choosing a duration, we faced a clear trade-off between player availability and the depth of the scenarios created. TSR’s previous experience running Intelligence Rising at varying lengths showed that it’s challenging to ensure sufficient immersion with games unless they last more than 3 hours. We did accommodate players who could only make a few hours, but opted for a half-day experience. Still, to do true justice to the scope it attempted to cover, the game would need to have been much longer: in the military, some wargames last for days or weeks.

3. What options are available to different teams?

A game must set rules about what different actors are “allowed” to do. Our game conceived of companies and the scientific community primarily as stakeholders that inform, support, or object to actions that governments take. This was a flattened representation of reality, made for simplicity’s sake. In turn 1, a risk-focused scientist lamented, “We were very keen on some of these precautionary approaches... but we have not had the opportunity [to implement them].” While the game accurately simulated a common feeling in the scientific community - intellectual influence but a frustrating lack of power to enact policy - we think we stripped out too much from the processes we seek to simulate.

A player on the science team articulated the issue perfectly: “one strange aspect is that [the game portrayed] the scientific community [as] kind of like tastemakers or something, but we don’t actually know anything [within the game] that anyone else doesn’t know.” This turned out to be one of the key discoveries from the playtest: We should find a way for the scientific community and companies to directly drive the AI for science technologies that are part of the scenarios. This is something we’re excited to delve into in future design iterations of the game.

4. How open-ended is players’ action space?

We decided to go with a fairly ‘closed’ game, where players chose from a set of pre-specified interventions, or policy cards. Instead, we could have given players more freedom to create their own policies. Doing so would have obvious merits in terms of spurring creativity, but would place a very high burden on adjudicators to maintain the rigour of the exercise. They would have to constantly communicate to players which policy actions and cascading consequences were coherent and realistic contributions to the world model, and which were not.

Our team also felt that when most of the insight and expertise on a topic is highly concentrated, as is arguably the case with advanced AI systems, this calls for a more closed game. A closed approach allowed our team to inject policy options that were more disruptive or novel, and could stretch participant imaginations, but still make sense within the context of the game. To ensure we covered contrasting ideas and assumptions, we conducted pre-game workshops and interviews to inform our design. During the game, players also had space to share nuances on their policy proposals. This allowed us to constrain the game enough that players had a shared sense of purpose, while still allowing for discretion, judgement and creativity. Ultimately though, the optimal blend of closed and open decision-making remains an open design question.

5. How much leverage do players get over the wider world?

Related to the degree of openness, we thought about how much insight and control players should have over the broader world in which they are playing. It’s fun to play a game where everything can be controlled, but not necessarily realistic or fruitful. In practice, the resource-intensive nature of the AI scaling paradigm and the existing geopolitical and scientific reality (where countries like the US and China have outsized roles) could lead to scenarios that leave some participants with very limited room to manoeuvre compared to others. While some countries do play an outsized role, our team took the view that it is important and interesting to explore the action space of middle power governments, as it is far from settled what they can achieve.

On the other hand, given time constraints, we made a strategic decision to downplay other ‘real-world’ constraints that normally affect policy decisions. For example, in the debrief session, one player noted that the game didn’t include “the general public” beyond the advocacy of the science communicators and the background information that adjudicators included in their “state of the world” presentation. Another highlighted the need to model “misinformation” and how policies can “easily become unpopular, or twisted”. Removing and downplaying these aspects created a ‘technocrat’s bubble’ where players could make what they felt were ‘optimal’ decisions while simultaneously recognising their real-world impossibility. Decisions like France’s AI nationalisation were strategically clean in the game but would be politically fraught in reality.

What’s next?

We plan to run additional Science 2030 games with more senior audiences and in different geographies in the coming year. We are also interested in bringing the merits of serious role-play games, such as encouraging more empathy between stakeholders and modelling chains of decisions, to other domains such as AI and education.

We are also considering potential design changes. One challenge with an AI for science game is how much to focus on ‘core AI’ developments and policies, versus those that are more specific to the ‘science’ ecosystem. In future iterations, we would like to go deeper on the latter. The current approach also treats policy interventions as discrete choices, giving little room for teams to adapt the proposed action or make choices about how to implement it better. There are clear reasons for this, but we are interested in exploring ways to add more room for nuance.

Finally, we are curious about the potential role that AI itself could play in designing and implementing games like this. Recent breakthroughs like Concordia show how LLMs can model human agents and create nuanced scenarios. We are excited about the potential to use AI to create novel or unappreciated feedback loops between the policy actions that players take and the simulated worlds that result. If you have ideas or work planned in this space, please reach out at aipolicyperspectives@gmail.com.

The authors would like to thank colleagues at Google DeepMind and external participants for participating in the elicitation sessions and early playtests. Their engagement, feedback and openness were central to this project. Thank you in particular to: Sara Babahami (UK Government Office for Science), Anastasia Bektimitova (the Entrepreneurs Network), Charlie Bradbury (Deloitte), Uma Kalkar (OECD, GovAI), Nick Moës (The Future Society), David Norman (Cooperative AI Foundation), Alex Obadia (ARIA), Toby Ord (Oxford Martin AI Governance Initiative), Velislava Petrova (Centre for Future Generations), D Max Reddel (Centre for Future Generations), Pranay Shah (ARIA), Nicole Wheeler (ARIA).