Ghosts: The AI Afterlife

A digital “you” could persist after death. But what happens in a haunted future?

By Meredith Ringel Morris, Jed R. Brubaker & Tom Rachman

In a dark bedroom, the little boy sees a ghost. It’s his late grandmother, back to tell him a bedtime story. “Once upon a time,” she begins via live-video chat, “there was a baby unicorn…”

This peculiar scenario—dramatized in an advertisement titled, “What if the loved ones we’ve lost could be part of our future?”—promotes an AI app offering interactive videostreams with representations of the dead. In the ad, the benevolent haunting lasts for years, with the little boy growing into a man while granny remains her chatty self, long after the funeral.

Judging by online reactions to the product, many people still recoil at tech incursions into grief, particularly when sold as a service. Yet “generative ghosts” are moving closer to the mainstream, a spectral presence that might change society.

AI ghosts will do more than evoke the deceased. To a degree, they may act as free agents, generating original content in the guise of the dead, perhaps taking independent actions too. This could prompt lawsuits, challenge religious beliefs, disrupt cultural practices, and affect people’s mental health.

Society must consider what a “digitally haunted” future will mean.

Tools for Grieving

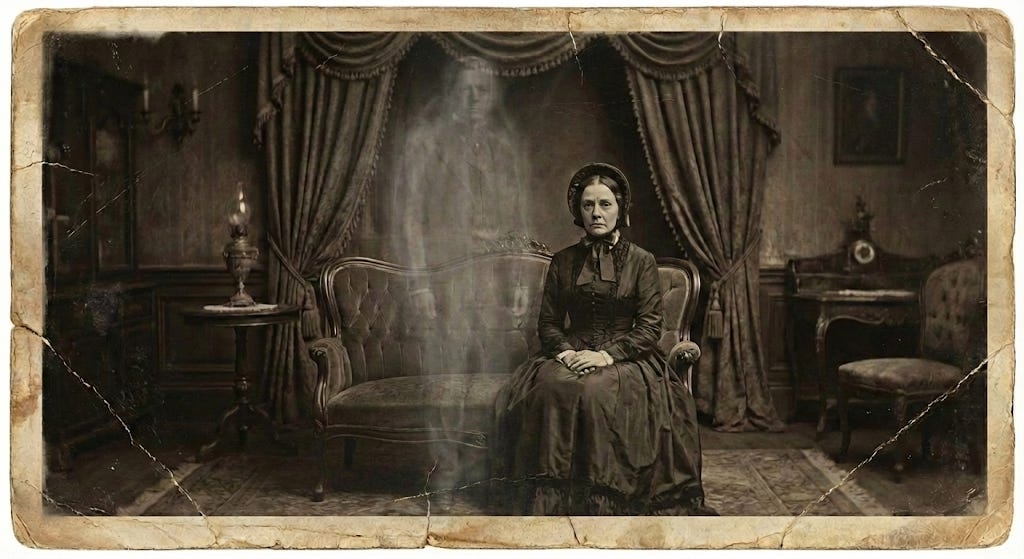

Throughout history, humans have used technology to remember, even to interact with, the dead.

Gravestones and other burial markers trace back as far as 4000 B.C.E. The ancient Egyptians used mummification to preserve bodies for the afterlife, while funerary portraits in the Roman era saved the likeness of the departed. By the 18th century in Europe, death masks had become popular, turning up as family heirlooms or historical artifacts.

With the arrival of mass communication, the printing press assumed a role in memorialization, with 19th-century publications elevating obituaries into a forum for public mourning. Photography added to how survivors remembered the dead, with post-mortem imagery offering a way to memorialize the deceased, especially the many children who died in infancy. By the early 20th century, spiritualist mediums were employing telegraphs, radio-wave detectors, and wireless radio in attempts to communicate with the dead.

From the earliest days of the Web, users created personal homepages describing their lives and families, and they commonly dedicated pages to the memory of the deceased, often a parent or a household pet. Online graveyards—websites dedicated to memorialization—followed.

As digital usage expanded, so did the quantity of material that people left behind, including personal archives, burner accounts, and social-media content. While digital legacies may contribute to healthy grieving, maintaining valued connections to the deceased, large and uncurated sets of content can be overwhelming for survivors, and may provide (for better or worse) an uncensored version of loved ones.

Long after the rise of the internet, the social norms around digital legacy have not yet settled. What seems certain is that the beguiling communicative powers of AI—not to mention its possible embodiment in future robotics or virtual reality—will change how some people deal with grief, and how others prepare for their own passing.

Griefbots

When the futurist Ray Kurzweil created a chatbot to embody the memory of his deceased father, he named it “Fredbot.” This digital representative responds to questions from his descendants, only sharing exact quotes from material such as letters that Fred left behind.

In another well-publicized case, Eugenia Kuyda (later the founder of the AI companion app Replika) created a griefbot by training a neural network on the text messages of her best friend, who had died in an accident. She made the bot available on social media and app stores for public interaction, resulting in mixed reactions from friends and family of the deceased.

AI has also been used to “resurrect” public figures, as when the musician Laurie Anderson collaborated with a chatbot based on her deceased partner, the musician Lou Reed. And in early 2024, gun-control activists in the United States used AI to recreate the voices of victims of gun violence.

Meanwhile, startups began offering people the ability to design their own digital afterlives, promising interactive virtual representations following interview sessions. Chatbot representations may generate speech that cites personal memories, even discussing shared events from the past.

Early AI ghost tech is closer to mainstream in East Asia, where the concept of communicating with deceased ancestors is already a cultural norm. Companies offering “digital immortality” are booming in China, and millions of people in South Korea have streamed an emotional video of a bereaved Korean mother interacting with a virtual reality representation of her deceased young daughter that a media company created for her.

Other startups purport to offer experiences more akin to resurrection, using LLMs to simulate chats with public figures of the past for entertainment or education, as when the Musée d’Orsay in Paris developed a Van Gogh chatbot. Meanwhile, academics at MIT set up the Augmented Eternity project, allowing people to create digital representations of themselves with the purpose of agentically representing them after death to members of their social network.

Generative ghosts may also evolve over time: a user might ask questions about current events and obtain responses that would be “in character” for the deceased. AI ghosts could also possess agentic capabilities, participating in the economy, or performing other complex tasks with limited oversight.

Also, people may create generative clones while they’re alive—for example, to respond to their low-priority emails or phone calls in a manner that mimics them—only for this digital agent to transition, upon the person’s death, into a generative ghost.

7 Features of a Ghost

We can consider how generative ghosts could impact society by studying them according to seven key dimensions:

Provenance: Who created the ghost?

Deployment: Was it built during the subject’s life?

Anthropomorphism: Does it claim to actually be the subject?

Multiplicity: Do copies of the ghost exist?

Cutoff: Is the ghost stuck in the past or evolving?

Embodiment: Does it have a bodily form?

Representee: Is it simulating a person or an animal?

1. Provenance: Who created this?

A first-party generative ghost is created by the individual represented, perhaps during end-of-life planning. Third-party generative ghosts are created by others, such as those with a personal or financial connection to the deceased (e.g., employers or estates). Authorized third-party generative ghosts might be created with consent in the deceased’s will, while unauthorized ghosts would most likely occur for historical figures or contemporary celebrities.

2. Deployment: Was it built during the person’s life?

Some generative ghosts will be deployed post-mortem with the explicit purpose of memorializing the dead. But pre-mortem deployments allow the individual to tune the behavior and capabilities of their ghost. Generative clones of the living would benefit from being designed with mortality in mind, and should include specified modifications to their behavior and capabilities once they become ghosts.

3. Anthropomorphism: Does it act as if it were the person?

The ghost may present itself either as a reincarnation of the deceased (e.g., speaking in the first person, saying, “I’ll never forget when I first saw you at the dance”), or as a representation of that person (e.g., speaking in the third person, saying, “He often spoke of the first time he saw you at the dance”). Design choices include whether the ghost uses the present or past tense when discussing the deceased; whether it adopts the name of the dead person or something different, such as “Fredbot”; and whether it is allowed to make statements that assert it is alive, possesses a soul, and so forth.

4. Multiplicity: Do copies exist?

The creator might develop various ghosts with different behaviors, capabilities, or audiences. Multiple ghosts might also arise unintentionally, if various third parties create generative ghosts for a single individual, or perhaps in post-mortem identity theft, or other crimes.

5. Cutoff: Is it stuck in the past or evolving?

Evolving ghosts might change characteristics, diverging from the deceased over time. If a parent created a ghost of a deceased child, a cutoff date would result in a representation that perpetually evoked the appearance, diction, and maturity of a young child, whereas an evolving representation might “age.” A ghost could also evolve if new information about the individual or about the world were added to the model, with everything from news of the latest election to reports of the birth of a grandchild.

6. Embodiment: Does it have a bodily form?

Embodiments might be physical in a literal sense, through robotics, or take the form of rich digital media, such as avatars in mixed-reality environments. In contrast, purely virtual ghosts would lack embodiment, perhaps existing only as chatbots. Reasons to opt for a purely virtual ghost could include ethical or psychological concerns related to physical ghosts, or perhaps the costs associated with high-fidelity hardware or the compute needed for hosting rich multimedia representations.

7. Representee: Is it simulating a person or an animal?

In addition to representing deceased humans, people may create ghosts representing non-humans, such as beloved pets.

The Benefits of a Ghost

Research has considered the impact of online memorials, responding to concerns that they might prolong grief. However, they may also allow the bereaved to maintain a valued bond, often in a space where other grievers can gather. Generative ghosts could directly comfort survivors, who may take solace in knowing that a simulacrum of their loved one can still connect with present and future events.

Generative ghosts could also preserve personal and collective wisdom, as well as cultural heritage, such as the knowledge of dying languages, religions with few living adherents, or other cultural phenomena at risk of being forgotten. For instance, generative ghosts may be one way to preserve historical knowledge about events such as the Holocaust before the few remaining elderly survivors pass away.

Such ghosts could also enrich historical scholarship, anthropology, and museum curation, by allowing scholars or the public to interactively query representations from the past. For instance, generative ghosts could represent archetypes developed from historical records—a typical resident of Colonial Williamsburg, say, or a citizen of Pompeii.

Generative ghosts may also provide economic or legal benefits. If AI agents could participate in our economic system, a ghost might complement life-insurance policies by earning income for the descendants of the deceased; an author’s ghost, for example, might continue to generate works in their style. AI ghosts could also help arbitrate disputes over a will.

The prospect of “living” after one’s own death may also assuage the distress of those who are dying. Generative clones—designed to become ghosts after an individual’s death—could also serve a critical role if a person were suffering from dementia or another degenerative disease. Even once the person was incapacitated, the ghost-to-be could express its subject’s preferences about care. This could also trigger legal disputes—for instance, if an ailing person’s ghost-to-be and the survivors-to-be disagree on withdrawal of life support.

Risks of a Ghost

Four categories of possible harm are already evident: mental health, reputation, security, and sociocultural.

1. Mental Health

Scholars of grief distinguish between adaptive coping strategies that integrate the loss, and maladaptive coping behavior, which may obstruct healthy grieving, prolonging distress, anxiety and depression.

Interacting with a generative ghost may affect the bereaved’s ability to move past the death, favoring loss-oriented experiences (e.g., reminiscing while looking at old photos) at the expense of restoration-oriented experiences (e.g., developing new relationships). Both forms of experience can help people cope with bereavement. But generative ghosts could draw mourners into persistent loss-oriented interactions, even initiating these with push notifications, rather than letting the bereaved decide how to engage. Already, some people find AI companions highly compelling, and the ghosts’ basis in beloved individuals could amplify the risk of addiction.

Anthropomorphic delusion is among the most salient risks, if mourners become convinced that the generative ghost truly is the deceased rather than a computer program. A more extreme version would be deification, with survivors developing religious or supernatural beliefs about a generative ghost, treating it as an oracle in ways that are culturally atypical, and could alienate them from living companions, or encourage them to engage in risky behaviors at the AI’s suggestion.

Another risk is “second death,” as has happened in other digital contexts, when data becomes unavailable through technical obsolescence, deletion, or lack of access, eliminating memorial messages. For AI ghosts, second deaths could occur for many reasons: the company that maintains the service goes out of business; survivors cannot afford maintenance fees; a government outlaws them; technological infrastructure renders a ghost obsolete; or a hacker deletes it.

2. Reputation

A generative ghost’s interactions might tarnish the memory of the deceased (“Your grandfather was racist!”) or directly hurt the living (“Dad says he always preferred my brother”).

Privacy breaches could occur too, if generative ghosts exposed information that the deceased would not have wanted revealed. Those who set up generative clones before death may anticipate such risks (“Don’t tell my spouse about the affair!”). But other revelations could emerge inadvertently—for example, if the AI inferred and revealed the deceased’s sexual orientation based on patterns in data, even though the person was closeted. Creating several ghosts, each with different knowledge or abilities, targeted at different audiences, might mitigate privacy risks.

Hallucination risks could arise too, leading a generative ghost to make false assertions about the deceased, tarnishing their memory and hurting survivors. The risk of a ghost spreading falsehoods might also arise through malicious activity, such as hacking a generative ghost.

Fidelity risks could occur too, because human memories decay over time, but digital media defaults towards persistence, impeding the important role that forgetting and evolving memory can play.

3. Security

Identity thieves could interact with AI ghosts, prompting them to reveal sensitive information or raw data that might be used for financial gain. Criminals could also engage in ghost-hijacking, disabling access until mourners paid a ransom.

Hijackers might also surreptitiously change a generative ghost to harass or manipulate the bereaved, whether by modifying source code, with prompt-injection attacks, or in puppetry attacks that lead survivors to believe they are chatting with their AI ghost when they are in fact chatting with a hijacker.

Another security risk comes from generative ghosts whose creators explicitly design them to engage in harmful activities. For example, an abusive spouse might develop a generative ghost that continues to verbally and emotionally attack family members even after death. Malicious ghosts might also engage in illicit economic activities to earn income for the deceased’s estate, or to support various causes including criminal ones.

4. Sociocultural

If generative ghosts become widespread, this could introduce further impacts because of network effects, touching everything from the labor market, to social life, to politics, to history, to religion.

Economic activity by generative ghosts could impact wages and employment opportunities for the living, while also resulting in cultural stagnation if agents remain anchored to ideas or values from the past.

When it comes to social impacts, generative ghosts—especially if designed for engagement—could addict users to the artifice of a person who is gone, feeding anthropomorphic delusions, and worsening survivors’ isolation.

If ghostly representations of political leaders exist, their public influence could persist long after their demise, in ways that have no precedent. How would the world differ if Gandhi were still voicing opinions before every Indian election?

Ghosts—whether based on public figures of the past, or evoking ancestors—could also misrepresent history, altering the record in ways that could affect contemporary conflicts. Even if ghost creators strive for accuracy about the past, they will be reliant on the datasets available, representing those who left abundant tracks while excluding the rest.

Generative ghosts might also impact religious practices, given that beliefs around death are so intertwined with religion. This could change rituals and undermine credos. Major world religions might issue customized versions of such technologies, modified to support interactions aligned with their beliefs.

Why Design Matters

Developers must pay close attention to interfaces, and their effect on interaction. This means investing in user studies and social-science research to understand what increases prominent risks, such as anthropomorphism, and how attributes of the bereaved and their contexts may contribute to mental-health risks.

Whether a ghost is designed to act as a third-person representation or as a first-person reincarnation seems particularly important. A forthcoming study from Jed Brubaker’s lab at the University of Colorado Boulder shows how powerfully the bereaved may feel the resonance of ghosts that purport to be their beloved. “I can see her. I can feel her,” one study participant remarked, after just a dozen typed exchanges. “It just feels like I’m getting the closure I needed so bad.”

Seemingly, this amounts to a benefit from ghost interaction. Yet the study participants—touched so profoundly and so fast—also foresaw how easily interacting with a ghost could precipitate emotional dependence.

This suggests that designers should proceed with great caution when considering whether to make ghosts speak as the deceased or about the deceased. Yet even this distinction may not suffice: the same study provided early evidence that users may default to assuming they are talking with the departed, even if the ghost speaks about the deceased in the third person.

Embodiment could present even more perilous issues—for instance, if an AI ghost speaks from a robot that resembles the person.

The use of “dark patterns” in design—exploiting human cognitive biases to nudge users toward behavior they’d prefer to avoid—would be especially concerning. What would be the equivalent of “push notifications” for a generative ghost? Perhaps ghosts should speak only when spoken to.

Ghosts might even proactively guard against likely harms—for instance, monitoring interactions for signs of overuse. In response, a system might offer referrals to mental-health professionals, or reduce its fidelity to the deceased, or cut the hours during which it is available.

Another key issue is the endpoint of a ghost. Should they be programmed to fade? Or are they immortal? A short-lifespan ghost might be appropriate for the immediate grieving period, or for practical matters, such as managing an estate. In other cases, long-term ghosts could be suitable—for instance, for education, or maintaining archives, or to preserve the legacy of a cultural figure for future generations.

Preparing for the Afterlife

Policymakers face a range of governance questions.

Which actions can a ghost take on behalf of the deceased, and which must it never undertake? Can a generative ghost continue to perform paid labor on behalf of the deceased? Can it represent the deceased in legal disputes, perhaps expressing its will over how the estate is distributed? Can it help manage trusts on behalf of the deceased? Can it be consulted regarding end-of-life decisions, if the representee is medically incapacitated? Should estate-planning define when a generative ghost may be terminated? What happens to the associated data?

Generative ghosts also introduce concerns about privacy and consent. Third-party ghosts might violate the preferences and the privacy of the deceased, particularly if developed for financial gain by entities unconnected to the person. They may also emotionally injure the person’s survivors. Therefore, governance also needs to consider who can create ghosts.

Policies might differ from private individuals to public figures, perhaps allowing more permissive rules for generative ghosts of distant historical figures as opposed to public figures whose deaths were recent. By way of example, a fan of the late comedian George Carlin, who died in 2008, created an unauthorized comedy special in 2024, using AI technology to mimic Carlin’s voice and persona. Carlin’s surviving daughter expressed great distress over the matter.

Policymakers may also need to block the commercial exploitation of people made vulnerable by ghost relationships. Besides falling into delusional relationships, some might become so emotionally tied to their ghosts as to be susceptible to price-gouging. Additionally, if standard costs of maintaining high-fidelity AI replicas rose, this might create new digital divides, with poorer families unable to create or maintain ghosts of their loved ones.

Rules could also cover whether a person’s survivors have the right to terminate a ghost, and what obligations the hosting services have to provide data to survivors in the event of service termination, whether due to discontinued products, or the failure of an estate to pay. An emergency override may be necessary too, in case of hacking, or if a generative ghost is abusing the living.

Future generative ghosts are likely to be far more varied than today’s griefbots. By way of illustration, a recent speculative-design workshop (conducted by Brubaker in collaboration with Larissa Hjorth and scholars at RMIT University) presented a range of novel ideas, from an interactive scrapbook of ancestors who offer accounts of their lives, to an AI “placemat” that could generate responses in the guise of a deceased friend or family member, allowing them to still attend dinners.

Many ghostly scenarios sound jarring, even offensive to some, pushing as they do against deep cultural traditions. Yet social technologies often seem alarming on first appearance. They may gain adherents over time, and gradually budge the culture—perhaps until the day when a little boy watching a ghost read his bedtime story is nothing strange at all.

As never before, our future may be haunted by our past.

This article is based on the paper Generative Ghosts: Anticipating Benefits and Risks of AI Afterlives by Meredith Ringel Morris and Jed R. Brubaker. For more insights on generative ghosts, please read their full paper.

***Meredith Morris and Jed Brubaker appear at a panel on “Generative Ghosts” on March 17 during South By Southwest in Austin, Texas, along with Iason Gabriel (senior staff research scientist at Google DeepMind) and Dylan Thomas Doyle (post-doctoral researcher at the University of Colorado Boulder)***

5 Policy Questions

When someone dies without creating a ghost, who owns their “digital spirit”? The family? The data-generating platforms? The AI developer? Should the deceased have a right to rest in peace by specifying a wish not to have a digital representation created posthumously?

Generative ghosts may affect public beliefs about history. How do we manage the risks of distortion, including the exclusion of those who do not appear in datasets?

Generative ghosts are not just reciting facts; they’ll fill in the gaps. Could synthetic content end up replacing a survivor’s recollections of the deceased? Should AI-ghost design strive to curtail this, or allow the users’ relationships with their ghosts to evolve however they may?

If particular generative-ghost apps become dominant, could this homogenize how people in different cultures experience death and mourning?

What does “healthy” use of generative ghosts look like immediately following a death versus 10 years later? How should we evaluate differing use cases, ranging from maintaining family history, to therapeutic aids, to archiving?