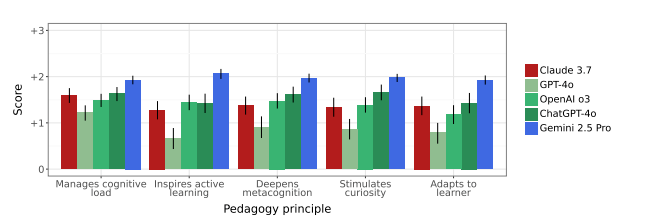

Today’s post comes from Daniel Gillick, a research scientist at Google DeepMind, who works on making Gemini more useful for teaching and learning. Daniel explores five pedagogical principles that the team is using in their work, the degree to which today’s AI systems can embody them, and what that means for how we should think about AI tutors. As with all the pieces you read here, it’s written in a personal capacity.

Artificial Intelligence will no doubt reshape many of society’s institutions and industries, but this shift has come quickly to education. Sharply increasing student use across all grade levels, a crisis in assessment, skepticism of the benefits, and concerns over data privacy and safety have created turmoil across the sector. Still, a longer-term view provides cause for optimism, as AI has the potential to streamline the logistics of teaching and enable more effective learning.

The most mainstream hope for AI in education is the possibility of a personalised tutor for every learner. This vision has a long history, dating back at least to Benjamin Bloom’s 1984 paper, which summarised the significant advantage of individual human tutoring over traditional classroom learning, as observed in studies run by his students. While the scale of Bloom’s purported ‘2-sigma’ effect is generally unrealistic, high-quality, high-dosage human tutoring has, unsurprisingly, proven quite effective. As AI systems have developed into compelling interlocutors, the prospect of scaling this tutor effect has captured public imagination (and sparked a rush on VC-funded EdTech).

Here, I examine how AI tutors may differ from human tutors, and their relative strengths and weaknesses, to bring the vision into sharper focus.[1] I note at the outset the pedagogical precondition of intersubjectivity, or the “possibility of trading places” – which casts some shade on the prospect of a non-human teacher. Or as Rousseau put it in Emile: “Remember you must be a man yourself before you try to train a man”. With this context, let’s review the five key principles of learning science we have prioritised in developing and evaluating AI systems for tutoring.

Principle #1: Inspire active learning

Let’s start with active learning. The core idea, as expressed by the American educational reformer John Dewey, is that people learn best by doing. AI systems can transform more passive learning, like reading a textbook chapter on gravity and acceleration, into an engaging dialogue or interactive activity. A learner could start with a motivating question such as “how long does it take for a raindrop to fall from a cloud?”, and work towards a calculation by conversing with an AI tutor that is trained to provide support, create diagrams, and generate simulations, rather than just give direct answers. This could help learners spend less time feeling stuck and more time in the educational sweet spot where they get just enough guidance to progress—what the Russian educator Lev Vygotsky called “the Zone of Proximal Development.”

But active learning only works if learners are motivated to engage. Often a human tutor not only provides the activity, but also serves as a supportive partner. The learner’s motivation to engage in active learning is rooted in the social dynamics of their relationship with the teacher, including their trust in the teacher’s process and the social pressure to answer their questions. Without these incentives, questions like “why do you think that’s the right answer?” fall flat, as learners are well aware that the relationship with an AI system is more transactional.

Principle #2: Manage cognitive load

Cognitive Load Theory, developed by the psychologist John Sweller in the 1980s, is based on the limitations in our working memory and our ability to process sensory information. It highlights that educators need to present materials in a way that focuses students’ cognitive resources on what matters most.

In experiments, Sweller found that novice learners often tried to solve problems in new areas through trial and error, rather than by learning more useful patterns. In such situations, providing a “worked example”—a step-by-step demonstration of how to solve a problem—can create new mental pathways that deepen with practice. LLMs can provide learners with reasonable worked examples and practice problems. But knowing when each of these moves is pedagogically appropriate remains very tricky. A good human tutor may use surprise to their advantage, suggesting a related problem to show a specific point. However, an AI tutor is less adept at developing a working model of the learner, and thus may be better served through predictability: being steady and prosaic rather than confusing and capricious.

Cognitive Load Theory also considers the optimal style and medium of education materials. Current AI systems are prone to generating long, overly comprehensive responses, which can impede learning. This is partly due to optimising for user feedback from a “single turn” engagement, rather than the “multi-turn” interactions that learners will actually experience. However, progress is underway on addressing this challenge as well as other ways to offer more natural conversation, for example by applying principles of “chunking” and “progressive disclosure” to break up how information is shared with learners.

AI could also help address the split-attention and modality effects, which impede learning when education materials are presented to learners in a confusing way. For example, instead of providing a diagram about a new concept far from the pertinent text, AI systems could generate self-contained, properly-annotated diagrams that augment a text-based explanation rather than divert learners’ attention from it. As image and video generation improve, educators will be able to go further, turning a textbook image of a combustion engine into a simulation that a learner can directly manipulate and interrogate.

Early prototypes like Project Astra demonstrate this ability to move beyond text, but the experience is still a long way from the richest learning experiences, such as when a tutor and a learner share a whiteboard to work through a problem.

Principle #3: Adapt to the learner

This brings us to adaptation, which traces its roots to Johann Comenius, a Reformation-era Protestant teacher, who felt that “learning proceeds sequentially”, and thus advocated for a schooling system that allowed for individualised rates of progress. In the 20th century, Maria Montessori turned this idea into a comprehensive method where the “teacher as guide” carefully observes each child, encourages agency and self-directed learning, and suggests activities appropriate to their interests and abilities.

The idea of using AI to personalise learning predates the LLM era. Intelligent Tutoring Systems date back to the 1970s and have long offered personalised pathways through learning materials. Now, LLMs have memorised more than any individual ever could and can keep an increasing amount of information in their context, a kind of super-precise memory, that offers the potential for far more detailed personalisation.

This personalisation could include relevant details about an individual learner, past conversations, or curriculum material that make the AI system’s language and notation more relevant to them. But there is a fine line between useful personalisation (for example, that feedback for a fourth-grade essay should use age-appropriate language) and annoying emphasis on irrelevant details (for example, using a learner’s enthusiasm for basketball to turn all statistics practice-problems into questions about field-goal percentage).

Personalisation in AI systems will continue to improve. But part of the implicit value in personalisation by human teachers is in the relationships they build with learners, with mutual trust and respect. At best, these are thorny issues for AI systems, which cannot offer any true empathy. In practice, an AI tutor may be more successful by instead offering transparency about the reasons for its personalised suggestions, rather than assuming a learner will readily hand over control.

Principle #4: Stimulate curiosity

In many ways, harnessing students’ curiosity is a precondition for the other pedagogical principles. The psychologist Daniel Berlyne saw curiosity as a conflict or incongruity in our minds, triggered by encountering something novel, complex or surprising. George Loewenstein argued that curiosity emerges when we perceive a gap between what we know and what we want to know. And Janet Metcalfe suggests that we are most curious about things that we feel are learnable, or on the verge of understanding.

By virtue of their unlimited availability, AI systems can help learners leverage their natural curiosities to achieve deeper and broader knowledge. Still, for many learners, especially younger ones, human teachers play a crucial role in bridging the gap between the curriculum (and its promise of future utility) and learners’ more immediate interests. This bridging is likely the product of some personalisation, but also of shared enthusiasm, which learners will tend to find disingenuous coming from an AI system or, worse, read as sycophantic behaviour.

Instead, AI systems could rely on their ability to help learners see and create new things, for example through coding, dramatically lowering the barriers to self-directed creativity. Rather than trying to stimulate creativity by emulating human emotions (“wow, what a great idea!”), AI systems may also benefit from leaning into what many learners feel is their greatest characteristic: their non-judgmental feedback.

Principle #5: Deepen metacognition

Finally, let’s consider the role of metacognition: reflecting on the process of learning to solidify new ideas or highlight what was most helpful. Although the broader idea dates back much further, the developmental psychologist John Flavell coined the term ‘metacognition’ in the 1970s after studying how learners completed memory tasks, noting for example how some older learners had developed strategies for practice, like repeating things to themselves.

Typical metacognitive exercises—reflecting on learning goals and strategies, explaining thought processes out loud, or teaching a concept back to a teacher or peer—are inherently social and thus tend to run afoul of the relational limitations of AI systems. Many learners will not have the patience to engage so deeply with a system that can’t really ‘listen’ in a human sense, even if the opportunity to do so would be valuable.

So while AI systems can help with ‘sense-making’, the practical task of understanding how things work, they are less likely to help with the personal process of constructing a lasting identity as a learner, what the developmental psychologist Robert Kegan calls ‘meaning-making’. The richness of human connection is often instrumental to such formative experiences.

_________

Stepping back to compare human and AI tutors from a distance, AI certainly has some advantages. It is always available, knows a lot, is non-judgmental, and can enable creativity. Its limitations are mainly as a social partner, which tend to render some elements of traditional pedagogy less appealing for learners. Thus, in developing AI tutors, I think that the north star is not an excellent human tutor, but something a bit different. These learning science principles for AI tutors still need to be explored and refined.

Lastly, it is perhaps not necessary, but worth stating nonetheless, that at some deeper level, the ultimate work of Teaching is about how to be a human being, cultivating intellectual and moral agency, a job uniquely suited to other human beings. AI systems are powerful tools which we hope can be effectively leveraged in support of more universal, more equitable Learning.

Thanks to the following individuals for helpful feedback: Kevin McKee, Markus Kunesch, Brian Veprek, Irina Jurenka, Julia Wilkowski, Yael Haramaty, and Miriam Schneider.

[1] This is a tricky essay to write. In an attempt to be brief and focused, I have necessarily glossed over much of the rich history and research about the science of learning. I have also not touched on the many forms of learning beyond one-on-one tutoring. AI research continues to move at breakneck pace, and so reflections on what AI can do, and how people will engage with it, are inevitably short-term in nature. I hope that by writing this, I create a bit more space for intentional discussion of the design and use of AI tutors.

This is super interesting, thanks so much.

I realise you said that this is a brief article and doesn't include everything, but I had a question on your curiosity principle.

On curiosity: I think it's interesting that you pick this out as a principle. As a former teacher I found motivating students to be the most important part of the job (and this has probably been the main barrier to previous edtech movements succeeding in supporting students who are hardest to reach, in my opinion). I think curiosity is a way into motivation. Specifically what are your thoughts on AI tutors supporting students to make connections? I have been inspired by Zurn and Bassett's work where they argue 'curiosity is edgework'.

Very interesting essay! The discussion of the five principles seems to suggest that the breakthrough has not yet been realized - as AI lacks empathy, emotional sensitivity, and is prone to hallucinations. Early examples (such as the Alpha Schools in the U.S.) already show the limitations of AI in education.

Although the challenges already associated with AI are briefly mentioned, the essay does not address how these could be mitigated in the future.